What is DPI & eDPI?

eDPI stands for ‘effective Dots Per Inch,’ a value used to express the effective sensitivity of any given player, regardless of hardware or software settings. The formula is DPI * ingame sens.

In gaming, users can use many different hardware/software settings combinations. One user might have his mouse set to be extremely sensitive at the hardware level (which means he would have a high DPI; Dots Per Inch) with a very low ingame sensitivity, while another user might go for the reverse. eDPI is a handy tool to compare the actual sensitivity of different setups that takes all of these settings into account.

- DPI = Dots Per Inch; your mouse’s sensitivity on a hardware level

- eDPI = DPI multiplied by ingame sensitivity

- eDPI is used to easily compare ‘true sensitivities’ across different setups in the same game

- eDPI cannot be used to compare across games, since different games handle sensitivity differently

What is DPI?

DPI stands for Dots Per Inch. If you’ve got your mouse set at 1000 DPI it means that the mouse will ‘measure’ 1000 points of movement per inch that you move the mouse. The higher your DPI is, the more your cursor moves when you move the mouse. The higher the DPI, the more ‘sensitive’ the mouse, from a hardware point of view.

DPI is always set on the mouse itself or via software (see How to find DPI and change it). In general, the DPI of your mouse will determine how sensitive it is throughout your entire system. Almost all pro gamers use a DPI of 1600 or lower, since higher DPI settings can cause issues such as smoothing (What is mouse smoothing?).

Many gaming mice have a DPI button located below the scroll wheel or on the underside of the mouse, allowing you to cycle between different DPI settings on the fly.

Note: In PC gaming, CPI (Counts Per Inch) is used interchangeably with DPI. CPI is the same as DPI in this context.

Sensitivity

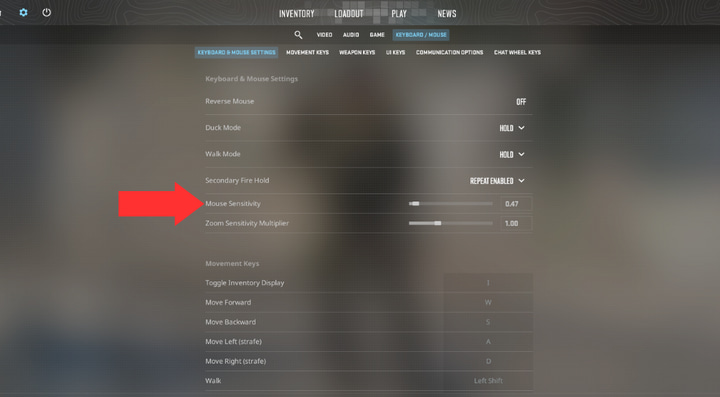

Sensitivity (or ‘sens’) is the ingame sensitivity setting. Unlike DPI, it only applies to the game you set it in, so you can use a different sensitivity in each game you play. Different games also use different ways of measuring sensitivity, so a sensitivity of ‘1’ in game A won’t necessarily mean the same in game B.

Looking at DPI or ingame sensitivity on its own to compare is usually a bad idea. Player A can have his mouse set to 1600 DPI with an ingame sensitivity of 2, whilst player B can have her mouse set to 400 DPI with an ingame sensitivity of 8. These look like wildly different configurations, but in reality both players’ mice will be equally sensitive to movement, since 1600 * 2 and 400 * 8 both come out to 3200. This is why we use eDPI to compare sensitivities within the same game.

eDPI

eDPI = DPI * Sensitivity

Find out how to find your DPI

Gamers like to compare settings, gear, and so forth, and comparing raw sensitivity and DPI can get confusing, so we use eDPI when comparing ‘true sensitivities.’

eDPI stands for effective Dots Per Inch, and it’s calculated by multiplying the mouse DPI with the ingame sensitivity. This gives gamers a way of comparing the true sensitivity of different players, regardless of their hardware or software settings. Note that eDPI is game-specific: different games use different ways to calculate sensitivities, so eDPI is only useful for comparing actual sensitivities of different players within the same game.

As an example, we’ve listed three different (fictional) players using the same eDPI, with drastically different settings.

| Player | DPI | Ingame Sens | eDPI |
|---|---|---|---|
| Player A | 400 | 2 | 400*2 = 800 |
| Player B | 800 | 1 | 800*1 = 800 |
| Player C | 1600 | 0.5 | 1600*0.5 = 800 |

As you can see, these three players use very different setups, but if you were to test all three setups, you wouldn’t notice a difference in the actual, real-life sensitivity of their mouse setups. For this reason, eDPI gets used to compare effective sensitivities.
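The table above can be reproduced with a few lines of Python. This is only a minimal sketch of the DPI * ingame sens formula; the function name `edpi` and the `players` dictionary are made up for illustration.

```python
def edpi(dpi: float, sens: float) -> float:
    """Effective DPI: mouse DPI multiplied by ingame sensitivity."""
    return dpi * sens


# The three fictional players from the table: (DPI, ingame sens).
players = {
    "Player A": (400, 2),
    "Player B": (800, 1),
    "Player C": (1600, 0.5),
}

for name, (dpi, sens) in players.items():
    # ':g' drops a trailing '.0' so whole numbers print cleanly.
    print(f"{name}: {dpi} DPI * {sens} sens = {edpi(dpi, sens):g} eDPI")
```

All three players come out at the same eDPI, which is why their setups should feel the same within one game.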

2.277 sensitivity 3200 DPI

7286.4 what sens?

1600 DPI 47 sensitivity

Therefore 400 DPI what sensitivity

400 is a fourth of 1600 (1600/4 = 400)

So you need to multiply your sens by 4 if you want to get the same eDPI at 400, since a lower DPI = slower.

Therefore: 47*4 = 188

400*188 = 75200 eDPI

1600*47 = 75200 eDPI
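The same conversion works for any DPI change, not just a clean factor of four. Here is a small sketch, assuming the game scales sensitivity linearly; the helper name `sens_for_same_edpi` is hypothetical.

```python
def sens_for_same_edpi(old_dpi: float, old_sens: float, new_dpi: float) -> float:
    """Ingame sens that keeps the eDPI unchanged after switching DPI."""
    target_edpi = old_dpi * old_sens
    return target_edpi / new_dpi


# Numbers from the comment above: 1600 DPI at 47 sens, moving to 400 DPI.
new_sens = sens_for_same_edpi(1600, 47, 400)
print(new_sens)
print(400 * new_sens == 1600 * 47)  # both setups share one eDPI
```

If the original sens was really 0.47 rather than 47, the same function gives the matching value for that case too.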

I think it should be 0.47*4 rather than 47*4, since I think the comment meant 47% rather than 47 times.

i already found my edpi now what do i do with it? im confused

You can compare it to other players, you can share it, or you can do nothing at all with it. It’s up to you; eDPI is just a means of comparing true sensitivities in a certain game, not something that you have to know.

having 1600 dpi and 0.589 in game, how much eDPI?

1600 * 0.589 = 942.4

having 800 DPI and 0.35 in game how much eDPI

800 times 0.35 = 280

having 800 DPI and 0.238 in game, how much eDPI?

800*0.238 = 190.4

Is 480 eDPI as fast as 480 DPI?

If your in-game sens is 1, yes.

Say I had 800 DPI and 0.65 ingame, so 520 eDPI. If I changed my DPI to 1600 and my ingame sens to 0.325, would my aim and feeling of sensitivity be the same, or would it feel completely different from my original sens even though the eDPI is the same?

If you have the answer please respond to this comment

Different DPI levels can feel different, so you might well notice a different feel when switching like that. That said: it should be rather easy to overcome, and a lot of people won’t even notice the difference.

Hey, I have a question. My Fortnite sens looks different from everyone else’s: mine shows, for example, 65,5, and when I look at my friends and YouTubers they all have dots (.) instead of commas (,) like me. Please help.

Different regional settings use either a dot or a comma as the decimal separator; it’s the same thing.

Well guys, this was for me the best guide to warzone settings ever!!! eDPI now 5000 and still lowering it, i have a different game now, life is much more easy 🙂

Also i did the graphic settings, its perfect nothing more to say.

Good job and i will follow the site if new games are up!!!

Thanks!!!

Hey, thanks for the kind words! That’s always appreciated. Love to hear that you’re bossing it ingame too!

Hi, just a question about Warzone: at the moment I use a sens of 25 and a DPI of 1500, which means my eDPI should be 37,500 if I understand you right. So I am much higher than the average.

If I lowered the settings to the average, I have the feeling the player moves and turns much too slowly ingame; in other words, I need to move much further on the mousepad to turn the player around.

I don’t get how pros can use those eDPI settings and be fast in game. For me this is nearly unplayable with such a low eDPI.

Any help in this point?

It’s really a question of getting used to it. If you feel comfortable at your current sensitivity and you feel like you’re hitting all of your shots then there’s no real need to switch, but the reason pros play at ‘lower’ sensitivities (at least compared to what many more casual players use) is that it gives them much more control over their micro movements. That said: aside from getting a mousepad that’s big enough to accommodate a lower sens (you’ll find that the pads pros use are much larger than what you find on an average office desk, for example) it’s just a matter of getting used to it. After a while it starts to feel more natural.

Some people are fans of gradually lowering your sensitivity, which is something that you can try if you want, but I am personally more partial to the ‘cold turkey’ method where you just lower it in one go. That way you don’t have to get used to a myriad of different sensitivities.

Hope this helps a bit!

Thank you, I was just wondering if the formula was correct, but you also did the math so I don’t have to do it anymore. I was talking about Fortnite, but what if I lower my y-sens to 3.2 and my x-sens stays at 3.5 with a DPI of 2300, what’s my eDPI then?

It’s kind of hard to calculate ‘true eDPI’ when you have different x and y sensitivities. If you go to 3.2 and 2300 you’d be at 7360.
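One way to look at split x and y sensitivities is to work out an eDPI per axis rather than a single number. The sketch below does that for the values in this question; treating each axis separately is an illustrative choice here, not an established convention.

```python
def edpi(dpi: float, sens: float) -> float:
    """Effective DPI: mouse DPI multiplied by ingame sensitivity."""
    return dpi * sens


dpi = 2300
horizontal = edpi(dpi, 3.5)  # x-sens
vertical = edpi(dpi, 3.2)    # y-sens

print(f"horizontal eDPI: {horizontal:.0f}")
print(f"vertical eDPI:   {vertical:.0f}")
# A ratio below 1 means vertical movement is slower than horizontal.
print(f"vertical / horizontal: {vertical / horizontal:.3f}")
```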

I play on a mouse with customizable software, so I have a DPI of 2300 and my ingame sens is 3.5. I want to change mice and get the Ninja Air58, which doesn’t have software, so I want my DPI at 800 but with the same eDPI. So first I do 2300*3.5 = 8050 and then 8050/800 = ? If I’m wrong please let me know.

Your current eDPI is 8050. To get to the same eDPI you’d have to use an ingame sens of ~10.06. I don’t know what game you’re talking about (some don’t allow you to fine tune your sens past the decimal point) but if you want it exact your sens would have to be 10.0625. With that said: something like 10.1 would definitely work as well: you get used to these super small changes fairly quickly.
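To see how much rounding actually costs, here is a rough sketch that rounds the exact sens to a given number of decimals and reports the eDPI you end up with. The function name `match_sens` and the `decimals` parameter are hypothetical; check how many decimals your game really accepts.

```python
def match_sens(old_dpi: float, old_sens: float, new_dpi: float, decimals: int):
    """Return (exact sens, rounded sens, eDPI that the rounded sens gives)."""
    target_edpi = old_dpi * old_sens
    exact = target_edpi / new_dpi
    rounded = round(exact, decimals)
    return exact, rounded, new_dpi * rounded


# Going from 2300 DPI at 3.5 sens to 800 DPI.
for decimals in (4, 2, 1):
    exact, rounded, resulting = match_sens(2300, 3.5, 800, decimals)
    drift = (resulting - 8050) / 8050 * 100  # percent off the original eDPI
    print(f"{decimals} decimals: sens {rounded} gives {resulting:.0f} eDPI ({drift:+.2f}%)")
```

Even the coarsest option stays within a fraction of a percent of the original eDPI, which fits the point that such small changes are easy to get used to.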

I have a Zeus E2 from ibuygaming and the highest normal DPI is 3200. Is that high or normal for PC players?

You don’t really need anything higher than 3200 DPI. However, that mouse seems to have a max polling rate of 125Hz, so that’s rather subpar for a modern gaming mouse.

Does this mean I have 75600 eDPI? I have 30 sens in R6, which translates to 36 (1.2 * R6 sens for CS:GO sens), and if I multiply my CS:GO sens of 36 by my DPI of 2100 I get 75600?

Your calculation to convert from Siege to CS:GO seems to be a bit off. Your sensitivity in CS:GO would be 7.18. Your eDPI in CS:GO would as such be 16380.

Thank you for sharing such an amazing guide. As a gamer, I would recommend 1k to 1600 for MMO games, and for a shooter game, 400 would be enough. What are your thoughts about it?

Thank you for the kind words!

With modern sensors it actually doesn’t matter as much: we find that most pro gamers are using either 400 or 800 DPI, with 1600 being another very popular option. Most don’t go any higher than that though (and there’s no need to since you can scale your sensitivity in the game if you want a higher overall sens) so we’d say your recommendations are pretty solid.

Thanks man really appreciated.

Can you help me? I was using 3.0 ingame sens, and now that I’ve changed to 800 DPI my sens feels very low; I have to put it at 7.0 now. What’s wrong? I see the pro players here using 0.75 with 800 DPI. How? It’s impossible to play like that.

I don’t know what game you’re talking about but pro players in general have a really low sensitivity compared to ‘casual gamers’. The average VALORANT pro, for example, has to move their mouse 47 centimeters to do a 360 degree turn in the game. It’s something you do get used to after a while.
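The distance for a full turn can be estimated from eDPI if you know how many degrees the game rotates per mouse count at a sensitivity of 1 (often called the yaw value). The sketch below assumes a yaw of 0.07 for VALORANT, a commonly cited community figure that does not come from this article, so treat the result as an estimate.

```python
CM_PER_INCH = 2.54

# Assumption: degrees of rotation per mouse count at ingame sensitivity 1.
# Commonly cited for VALORANT; not a value taken from this article.
ASSUMED_VALORANT_YAW = 0.07


def cm_per_360(edpi: float, yaw: float) -> float:
    """Centimeters of mouse travel needed for a full 360 degree turn."""
    degrees_per_inch = edpi * yaw
    inches_per_turn = 360 / degrees_per_inch
    return inches_per_turn * CM_PER_INCH


# 277 is the average VALORANT eDPI quoted further down in the comments.
print(f"{cm_per_360(277, ASSUMED_VALORANT_YAW):.1f} cm per 360")
```

Under that assumption an eDPI of 277 works out to roughly 47 cm per 360, in line with the figure mentioned above.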

Does your monitor resolution affect the DPI a player would use?

For instance, if I play a game at 1440p I might need a higher DPI, since moving 100 pixels at 1080p would result in 1 inch of movement on my screen, while 100px at 1440p might only be 1/2 an inch on a higher resolution screen. Am I thinking about this correctly?

I ask because most pros are playing at 1080p and I play at 1440p, so if I use the average pro eDPI, I have to move my mouse a literal mile to turn around in-game which doesn’t seem realistic.

For reference, in Valorant, I play at eDPI 516 compared to the average of 277 (I think it’s closer to 330), which is 1.86x (1.56x) higher, and 1440/1080 is 1.33x higher, which is enough to correlate the difference to me.

Side comment: the infographic posted yesterday on /r/valorant claims that the average eDPI is 277, but I think the average is skewed low because of how the calculation and statistics are done.

It depends. For some 2D games it could make a difference but rotation in 3D games is usually based on angles, not pixels, so the game doesn’t really care about your resolution. That’s why CS:GO pros don’t have to change their sensitivity when they change resolution, for example. If you want to be absolutely sure you can always measure your cm/360 though. As far as the infographic goes: that was definitely the average sensitivity of players at that time. Our list and guides have been updated since last week, but even if we look at the median eDPI we can see that it’s on the ‘lower’ side at 251.

Can you tell me whether the chipset driver version of a motherboard can influence the accuracy of a mouse? I tested a few different versions and I feel a slight difference in my gameplay. This was on both AMD and Intel, and I tested with several mice.

I haven’t heard of that happening yet so I’m not sure if that should be possible, though you never know of course. Sorry that I can’t be of more help!

So what’s the best way to find sensitivity in a new shooting game? My friend told me to use a ruler to measure the distance it takes to turn 360 degrees in the old game, then match that same distance in the new game.

Yes, that is indeed a good way of doing things. Aside from that there are also a number of websites that convert your sensitivity for you (just google ‘sensitivity calculator’) so you can use those as a baseline and then measure your cm/360 to ensure that it’s exactly the same.
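For a rough idea of what those calculators do: if two games both rotate by a fixed number of degrees per mouse count, you can convert a sensitivity by matching the cm/360. The yaw values below (0.022 for CS:GO, 0.07 for VALORANT) are commonly cited community figures and are assumptions here, so always confirm the result by measuring.

```python
CM_PER_INCH = 2.54

# Assumed degrees per mouse count at sensitivity 1; not from this article.
ASSUMED_YAW = {"csgo": 0.022, "valorant": 0.07}


def cm_per_360(dpi: float, sens: float, yaw: float) -> float:
    """Centimeters of mouse travel for a full 360 degree turn."""
    return 360 / (dpi * sens * yaw) * CM_PER_INCH


def convert_sens(sens: float, from_game: str, to_game: str) -> float:
    """Sensitivity in to_game that gives the same cm/360 at the same DPI."""
    return sens * ASSUMED_YAW[from_game] / ASSUMED_YAW[to_game]


csgo_sens = 2.0
valorant_sens = convert_sens(csgo_sens, "csgo", "valorant")
print(f"CS:GO {csgo_sens} is about VALORANT {valorant_sens:.3f}")
print(f"{cm_per_360(800, csgo_sens, ASSUMED_YAW['csgo']):.2f} cm/360 in CS:GO")
print(f"{cm_per_360(800, valorant_sens, ASSUMED_YAW['valorant']):.2f} cm/360 in VALORANT")
```

Note that the converted eDPI differs between the two games even though the cm/360 is identical, which is exactly why eDPI can’t be compared across games.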